The following is a rough summary of how I've set up my Ubuntu file system, including some links to resources I found useful.

I have three hard drives: a 500 GB drive for the system and "non-critical" files, and two 300 GB drives to hold "valuable" data. "Valuable" data includes documents, photos, source code, music and some videos; less valuable, "non-critical" data includes recorded TV programs, movies and so on.

On my Windows XP system I used a RAID-1 setup to automatically mirror my data drive and protect against hard drive failure. A RAID-1 array keeps everything nice and simple, but under Ubuntu I decided the tools were powerful enough that I could mirror the data "manually" and gain a little more control. In particular, while I want a copy of the data in case of hard drive failure, a slightly delayed backup in case of catastrophic user error would also be very valuable: RAID-1 copies an accidental deletion to the mirror instantly, whereas a periodic copy leaves a window in which the lost files can still be recovered.

So each drive is mounted separately, and the 500 GB drive is partitioned to give some space to the system and the majority to "stuff" (videos, backups, etc.).

I highly recommend partitioning the drives before or during installation: while it was possible to move everything around afterwards, it was time-consuming and fiddly. I used a GParted LiveCD to split my main drive and create a separate /stuff partition, but this took forever (about 15 hours, and my system isn't slow...). I used the GParted LiveCD because this tutorial on installing WinXP on a Linux system suggested that the version of GParted on the Ubuntu LiveCD wouldn't work properly.

This guide on moving /home to a separate partition was helpful, though I think I just used cp -a to copy the files rather than the more complicated find | cpio command.
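A minimal sketch of the cp -a approach, assuming the new partition is already mounted somewhere temporary such as /mnt/newhome (that mount point and the copy_home function are hypothetical, not taken from the guide). Copying SRC/. rather than SRC/* also picks up hidden dotfiles, and a recursive diff afterwards is a cheap sanity check before switching /home over to the new partition.

```bash
#!/bin/bash
# Hypothetical helper: copy a directory tree with cp -a, then verify it.
# Usage (as root): copy_home /home /mnt/newhome
copy_home() {
    local src=$1 dest=$2

    # "$src/." copies the contents of src, including dotfiles, into dest.
    # -a (archive) recurses and preserves mode, ownership, timestamps and links.
    cp -a "$src/." "$dest/" || return 1

    # diff -r exits 0 only if both trees have the same file names and contents.
    diff -r "$src" "$dest" > /dev/null
}
```

Run it as root so that cp -a can preserve the ownership of every user's files; as an ordinary user the copies would end up owned by you.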

Once I had everything set up and mounted, I created a script, /home/tom/backup_mirror_home.sh, to run rsync and mirror the drives:

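```bash
#!/bin/bash
# Mirror /home onto the second data drive and log the result.
# The trailing slashes sync the contents of /home into /homeMIRROR
# (rather than creating /homeMIRROR/home).
date >> /home/tom/backup_mirror_home.log

# Send stdout to the log first, then stderr to the same place, so errors are logged too.
rsync --archive --delete --stats /home/ /homeMIRROR/ >> /home/tom/backup_mirror_home.log 2>&1
```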

The script needs to be executable (chmod +x) for cron to run it directly. I added it to root's crontab (sudo crontab -e) to run every 3 hours, which should be often enough:

```
# m h dom mon dow command
0 */3 * * * /home/tom/backup_mirror_home.sh
```
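One caveat with running the mirror unattended: if the mirror drive ever fails to mount, /homeMIRROR is just an empty directory on the system partition, and rsync would quietly start copying all of /home onto it. A hedged sketch of a guard, assuming the mountpoint command (part of util-linux on current Ubuntu releases) and a hypothetical mirror_if_mounted function:

```bash
#!/bin/bash
# Hypothetical guard: only mirror if the destination is a mounted filesystem.
# If the mirror drive failed to mount, the destination would be a plain
# directory on the system partition and rsync would fill that up instead.
mirror_if_mounted() {
    local src=$1 dest=$2
    if ! mountpoint -q "$dest"; then
        echo "$(date): $dest is not mounted, skipping mirror" >&2
        return 1
    fi
    rsync --archive --delete --stats "$src/" "$dest/"
}
```

In the backup script, the rsync line could then become mirror_if_mounted /home /homeMIRROR >> /home/tom/backup_mirror_home.log 2>&1, so a failed mount shows up in the log instead of a full system partition.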

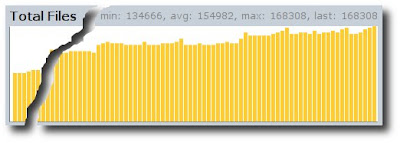

The first rsync run took a while to copy the 200 GB or so across, but later runs are very fast (probably under a minute): rsync only transfers files that have changed, and most of the data on the drive is photos and music that rarely change.
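Why the later runs are so quick: by default rsync uses a "quick check" and skips any file whose size and modification time already match on the destination. The sketch below is a rough model of that rule in bash, for illustration only; needs_transfer and count_changed are hypothetical names, real rsync does considerably more, and the code assumes GNU stat and find.

```bash
#!/bin/bash
# Rough model (not rsync's actual code) of rsync's default "quick check":
# a file is skipped when the destination copy has the same size and mtime.

# needs_transfer SRC_FILE DEST_FILE: exit 0 if DEST_FILE is missing or its
# size/mtime differ from SRC_FILE; exit 1 if the quick check says "unchanged".
needs_transfer() {
    [ -e "$2" ] || return 0
    [ "$(stat -c '%s %Y' -- "$1")" != "$(stat -c '%s %Y' -- "$2")" ]
}

# count_changed SRC_DIR DEST_DIR: print how many regular files would be sent.
count_changed() {
    local n=0 f
    while IFS= read -r -d '' f; do
        needs_transfer "$1/$f" "$2/$f" && n=$((n + 1))
    done < <(cd "$1" && find . -type f -print0)
    echo "$n"
}
```

On a mirror of mostly static photos and music, count_changed would typically report only a handful of files, which is why the periodic runs finish so quickly.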

A useful trick for keeping an eye on the progress of the copy operations is watch df. df shows the current usage of each mounted disk, and watch re-runs it every 2 seconds, so you can watch the percentages creep up and be convinced something is actually happening.

Once all that was done, I set up sbackup to back up to the /stuff drive and to an external USB drive.

My actual process was a little more complicated than described above, as the 300 GB drives already contained NTFS partitions holding the critical data, and I wanted to move it over to ext3 without ever having fewer than two copies of the data. The steps were roughly as follows:

- Mount the NTFS drive (/dev/sdb) and copy its data to the 500 GB drive (/dev/sda).

- Format /dev/sdb as ext3 and copy the data from /dev/sda back to /dev/sdb.

- Move /home to /dev/sdb and reorganise it a bit (renaming and moving files and folders about).

- Format /dev/sdc as ext3 and mirror /dev/sdb onto it.
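To make the "never fewer than two copies" rule a little more concrete, one option is to record checksums of every file before copying and check the copy against them before reformatting the original drive. A minimal sketch of that hypothetical extra step (not part of the steps above), assuming GNU coreutils and findutils; make_manifest and verify_manifest are made-up names:

```bash
#!/bin/bash
# Hypothetical helpers: record SHA-256 checksums of every file in a source
# tree, then check a copy against them before the source drive is wiped.
# MANIFEST should be an absolute path outside DIR, since both functions cd.

# make_manifest DIR MANIFEST: write "checksum  ./relative/path" lines for DIR.
make_manifest() {
    (cd "$1" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum) > "$2"
}

# verify_manifest DIR MANIFEST: exit 0 only if every listed file in DIR
# exists and matches its recorded checksum (extra files are not detected).
verify_manifest() {
    (cd "$1" && sha256sum --check --quiet "$2")
}
```

For the first step, that would mean running make_manifest on the mounted NTFS drive, verify_manifest against the copy on /dev/sda, and only then formatting /dev/sdb.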